Rain and Fog Got You Down? Lidar Clears the Way for Safer Intelligent Driving

In May this year, a Tesla vehicle running FSD failed to detect a train ahead of it in heavy fog. The driver made an emergency turn at the last moment and avoided crashing into the train [1].

Seeing Through Fog Is a Pain Point for Both Human and Intelligent Driving

Fog greatly reduces visibility, and once its view becomes blurred, a driving system’s judgment of road conditions degrades significantly. Because fog weakens light, only light reflected off nearby objects reaches the vehicle; in heavy fog, for example, visibility is less than 50 meters. With visible distance reduced, the system’s judgment of distance no longer matches reality, making a collision with the car in front more likely.
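Koschmieder’s law is a standard atmospheric-optics model that the article itself does not use. Here it serves as a minimal sketch of why visibility figures like "less than 50 meters" matter. The sketch assumes homogeneous fog and the classic 2% contrast threshold. Under those assumptions, an object’s apparent contrast fades exponentially with distance, and visibility is simply the distance at which that contrast becomes too faint to detect.

```python
import math

# Koschmieder's law (standard atmospheric optics, assumed here; not from the article):
# in homogeneous fog, an object's apparent contrast decays as C(d) = C0 * exp(-sigma * d),
# where sigma is the extinction coefficient in 1/m. Visibility V is the distance at which
# contrast drops to the threshold eps, so sigma = -ln(eps) / V (about 3.912 / V for eps = 0.02).

CONTRAST_THRESHOLD = 0.02  # classic 2% threshold; an assumption, other standards use 0.05


def extinction_from_visibility(visibility_m: float, eps: float = CONTRAST_THRESHOLD) -> float:
    """Extinction coefficient (1/m) implied by a given visibility."""
    return -math.log(eps) / visibility_m


def apparent_contrast(distance_m: float, visibility_m: float, inherent_contrast: float = 1.0) -> float:
    """Apparent contrast of an object seen through homogeneous fog at distance_m."""
    sigma = extinction_from_visibility(visibility_m)
    return inherent_contrast * math.exp(-sigma * distance_m)


if __name__ == "__main__":
    heavy_fog_visibility = 50.0  # meters, the heavy-fog figure quoted in the text
    for d in (10, 25, 50, 75):
        c = apparent_contrast(d, heavy_fog_visibility)
        print(f"object at {d:>2} m: apparent contrast {c:.3f}")
```

In this model, an object at half the visibility distance still keeps about 14% of its contrast. At the visibility distance itself, contrast drops to the 2% threshold, and beyond it the object effectively disappears for a contrast-based sensor.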

Statistics show that 30% of traffic accidents are caused by bad weather and that 70% of freeway accidents occur in foggy weather [2].

Algorithmic Testing Shows That Lidar Still Achieves Reliable Detection on Foggy Days

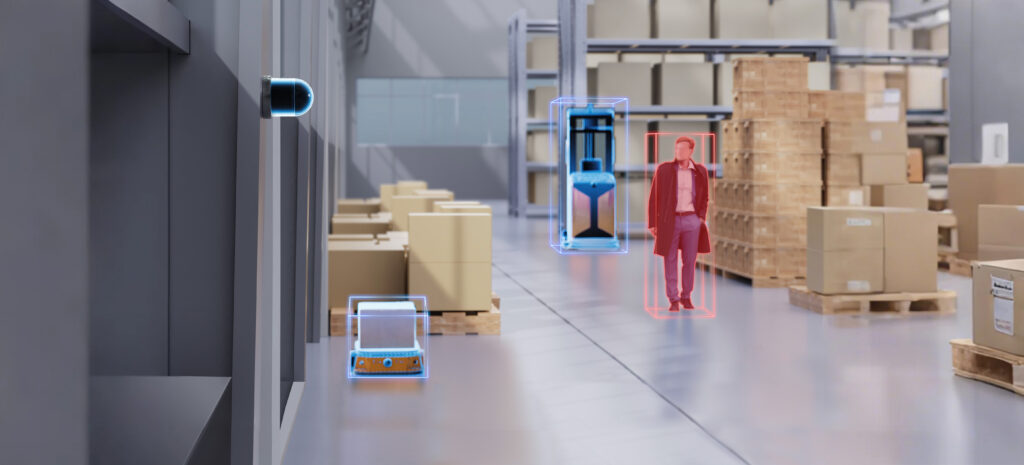

Unlike camera-based detection, lidar continues to work in mild to moderate fog and provides vehicles with reliable sensory data.

Hesai’s Maxwell Intelligent Manufacturing Center has built a specialized rain & fog testing track for lidar point cloud testing. The test results reveal the following:

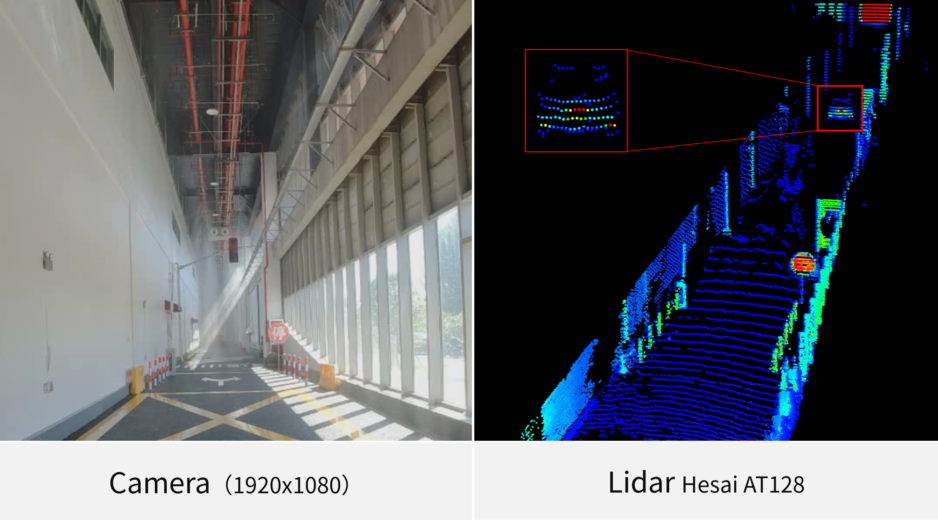

- Under the same fog conditions, when the camera has difficulty identifying the vehicle 50 meters ahead, the lidar point cloud still shows it clearly, with far more valid data points than the detection algorithm requires.
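One way to build intuition for "far more valid data points" is to estimate how many lidar points land on a car at 50 meters. The sketch below does this from angular resolution alone. Every number in it is hypothetical and chosen only for illustration: the 0.1° × 0.2° resolution, the 1.8 m × 1.5 m rear face of the car, and the `return_fraction` used to mimic points lost to fog. None of them is Hesai test data, and the source does not state the algorithm’s point threshold.

```python
import math

# Hypothetical sensor and target parameters, chosen only for illustration.
H_RES_DEG = 0.1     # assumed horizontal angular resolution
V_RES_DEG = 0.2     # assumed vertical angular resolution
CAR_WIDTH_M = 1.8   # assumed width of the rear of a passenger car
CAR_HEIGHT_M = 1.5  # assumed height of the rear of a passenger car


def angular_extent_deg(size_m: float, distance_m: float) -> float:
    """Angle in degrees subtended by an object of size_m seen from distance_m."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))


def expected_points(width_m: float, height_m: float, distance_m: float,
                    h_res_deg: float = H_RES_DEG, v_res_deg: float = V_RES_DEG,
                    return_fraction: float = 1.0) -> int:
    """Rough count of points hitting a flat target in one frame.

    return_fraction < 1 crudely models returns lost to fog attenuation.
    """
    columns = math.floor(angular_extent_deg(width_m, distance_m) / h_res_deg)
    rows = math.floor(angular_extent_deg(height_m, distance_m) / v_res_deg)
    return int(columns * rows * return_fraction)


if __name__ == "__main__":
    for fraction in (1.0, 0.5):
        n = expected_points(CAR_WIDTH_M, CAR_HEIGHT_M, 50.0, return_fraction=fraction)
        print(f"car at 50 m, return fraction {fraction}: about {n} points")
```

With these assumed values, the car yields about 160 points per frame in clear air and about 80 if half the returns are lost. That is typically still a dense cluster compared with a camera image that has washed out.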

Hesai’s extensive real-road testing also shows that on days with mild to moderate rain and fog, lidar still reliably senses important targets such as vehicles and pedestrians, even though its ranging capability is somewhat reduced. At mid to close range, lidar performance in rain and fog is almost the same as on clear days. When the camera’s image quality degrades, or large parts of its image are lost because the window is occluded, lidar continues to output high-quality data that supplements the camera, serving as an important safety backstop for the system.

Why Does Lidar Make Driving in Fog Safer?

Lidar Actively Emits Light to Achieve a Longer Range of Vision

Fog consists of water droplets suspended in the air, which absorb light and scatter it in all directions. This not only reduces the amount of sunlight reaching the ground but also significantly weakens the light reflected from surfaces. By the time that reflected light has passed back through the rain and fog to the human eye or a car camera, it is often too weak to reveal objects. Fog also typically forms in the early morning and at night, when lighting is already poor, which makes sensing especially difficult for the vehicle.

Because lidar emits its own light, it does not depend on ambient lighting. While remaining safe for the human eye, a typical long-range lidar delivers a peak optical power density to an object 100 meters away that is about seven times that of sunlight on a sunny day [3]. This means lidar maintains high-quality detection even in poor weather. Just as valuable, the light lidar emits is invisible, so unlike visible light it does not cause visual disturbances to the human eye. Instead, it quietly safeguards each journey and makes vehicles safer.

A Large “Aperture” Design Opens the Way for Photons

Another problem sensors face on foggy days is condensation on their windows, which impairs light transmission and degrades detection.

Because vehicle-mounted cameras have small optical apertures, even a single water droplet can sometimes block a large part of the lens’s field of view and hide key information. Vehicle manufacturers therefore need to develop cleaning systems that keep camera lenses free of water and dirt.

Lidar uses larger optical apertures, which let more light in and greatly reduce the share of light blocked by water droplets on the window. Even if its ranging capability declines, lidar does not lose its data to water droplets, and it continues to reliably detect vehicles and pedestrians on the road.
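The aperture argument comes down to area ratios. In the rough sketch below, a droplet is modeled as an opaque disc across a circular aperture, and the sketch computes what fraction of that aperture it covers. This is a deliberate simplification that ignores refraction, defocus and partial transparency. The 2 mm droplet and the 4 mm and 40 mm apertures are hypothetical values, not measured Hesai or camera dimensions.

```python
# Simplified geometric model (an assumption): treat a droplet as an opaque disc lying
# across a circular aperture, ignoring refraction, defocus and partial transparency.


def blocked_fraction(droplet_diameter_mm: float, aperture_diameter_mm: float) -> float:
    """Fraction of a circular aperture's area covered by one circular droplet, capped at 1."""
    return min(1.0, (droplet_diameter_mm / aperture_diameter_mm) ** 2)


if __name__ == "__main__":
    droplet_mm = 2.0  # hypothetical droplet size
    for name, aperture_mm in (("small camera lens (hypothetical)", 4.0),
                              ("large lidar aperture (hypothetical)", 40.0)):
        share = blocked_fraction(droplet_mm, aperture_mm)
        print(f"{name}: {share:.2%} of the aperture blocked")
```

The covered fraction falls with the square of the aperture diameter. In this model, a tenfold larger aperture therefore reduces the impact of the same droplet a hundredfold.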

Water droplets on the window cause data loss over a large area of the camera image

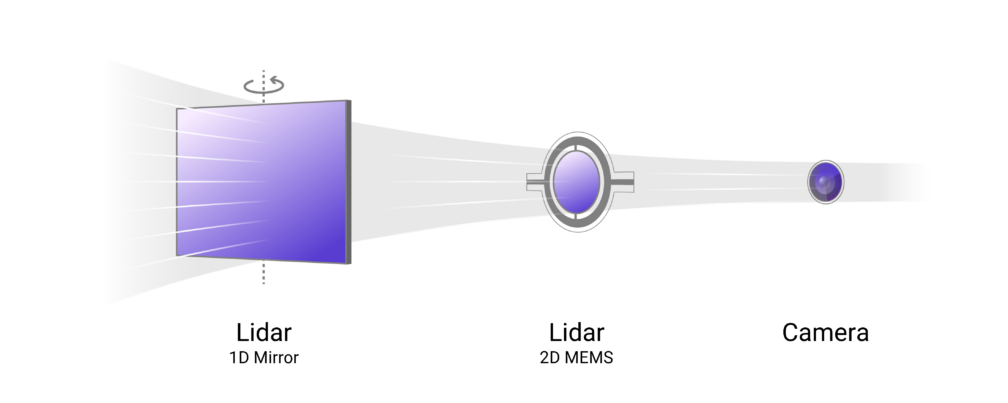

It’s worth mentioning that Hesai’s AT series uses a rotating mirror design. The optical aperture of a rotating mirror is much larger than that of a MEMS mirror, which gives the AT series a greater advantage in rain and fog.

Comparison of lidar and camera lens apertures

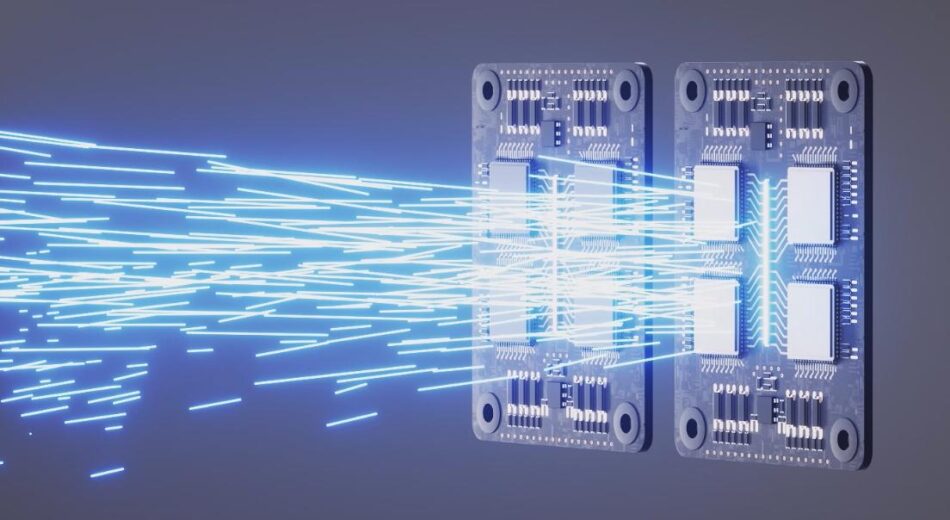

Single-Photon Detectors: Dedicated to Capturing Every Returning Photon

After light passes through the aperture, the detector must still capture it in the final stage of detection before a data signal can be sent to the processor. At this point, everything depends on the detector’s ability to capture photons. The lidar Hesai delivers for series-production intelligent vehicles uses advanced single-photon detectors, which can generate a valid data signal from just a few photons, keeping the vehicle’s sensing capabilities consistent across all kinds of scenarios.
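Why a detector that responds to only a few photons helps can be illustrated with Poisson photon statistics, the standard model for photon arrivals. The sketch below computes the probability that a weak return delivers at least `threshold` photons. The mean photon count and the thresholds are hypothetical. The model ignores background light, dark counts and dead time, and it is not a description of Hesai’s detector.

```python
import math


def detection_probability(mean_photons: float, threshold: int) -> float:
    """P(N >= threshold) for a Poisson-distributed photon count N with mean mean_photons.

    Simplified model: ignores background light, dark counts and detector dead time.
    """
    # Probability of collecting fewer photons than the threshold...
    below = sum(math.exp(-mean_photons) * mean_photons ** k / math.factorial(k)
                for k in range(threshold))
    # ...and its complement is the chance the return is registered.
    return 1.0 - below


if __name__ == "__main__":
    weak_return = 3.0  # hypothetical mean photons per pulse after fog attenuation
    for threshold in (1, 3, 20):
        p = detection_probability(weak_return, threshold)
        print(f"threshold {threshold:>2} photons: detection probability {p:.3f}")
```

In this model, a return averaging three photons is registered about 95% of the time by a detector that fires on a single photon. A detector needing twenty photons would almost never see the same return.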

Hesai’s ADAS products utilize single-photon detectors

Better Lidar Ranging Capability Means Stronger Rain & Fog Penetration

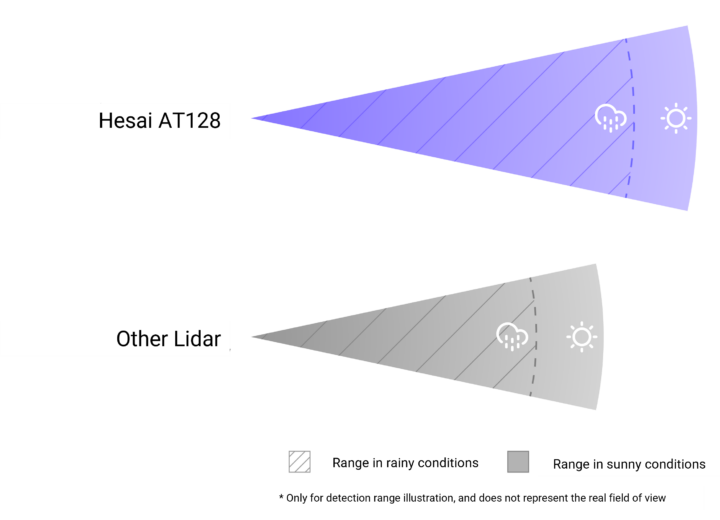

In summary, since rain and fog reduce a lidar’s ranging capability, only a lidar with a longer range under standard conditions can keep an extended detection range in rainy and foggy weather. The standard range at 10% reflectivity is therefore an important criterion for evaluating a lidar’s ability to penetrate rain and fog. A lidar that ranges to 200 meters at 10% reflectivity offers one-third more range than one that reaches 150 meters at 10% reflectivity. This lets the intelligent driving system’s sensing capabilities be used to full effect in rain and fog and maintains driving safety in all weather and scenarios.
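The claim that better clear-weather range carries over into fog can be checked with a simplified lidar equation. This is an assumption, not the article’s own model. For an extended diffuse target, received power scales as ρ·e^(−2σR)/R², where σ is the fog extinction coefficient and the factor 2 reflects the round trip. Equating that to the clear-weather detection threshold ρ/R0² gives R·e^(σR) = R0, and the sketch solves this by bisection. The σ value is hypothetical.

```python
import math


def fog_range(clear_range_m: float, sigma_per_m: float, tol_m: float = 1e-6) -> float:
    """Maximum range in homogeneous fog for a lidar whose clear-weather range is clear_range_m.

    Simplified lidar equation (an assumption): for an extended diffuse target the received
    power scales as rho * exp(-2 * sigma * R) / R**2. Setting it equal to the clear-weather
    threshold rho / R0**2 gives R * exp(sigma * R) = R0, solved here by bisection.
    """
    if sigma_per_m == 0:
        return clear_range_m
    lo, hi = 0.0, clear_range_m  # the fog range can never exceed the clear range
    target = math.log(clear_range_m)
    while hi - lo > tol_m:
        mid = 0.5 * (lo + hi)
        # Compare in log space to avoid overflow: log(R) + sigma * R vs log(R0).
        if math.log(mid) + sigma_per_m * mid < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    sigma = 0.01  # hypothetical extinction coefficient for light fog, 1/m
    for r0 in (150.0, 200.0):  # clear-weather ranges at 10% reflectivity, from the text
        print(f"clear range {r0:.0f} m -> estimated fog range {fog_range(r0, sigma):.1f} m")
```

The clear-weather ratio 200 / 150 = 4/3 is the one-third improvement cited in the text. Because R·e^(σR) increases with R, the lidar with the longer clear-weather range also keeps the longer range in fog under this model.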

L3 Will Be Here Soon: Improving Ranging Capabilities in All Scenarios Is a Growing Trend

As L2 driving has become more widespread, lidar has “crossed the chasm,” with a penetration rate of 20.5% out of 150,000 EVs as of May this year [4].

In 2024, the introduction of L3 autonomous driving is setting the stage for new demands in automotive perception. Future lidar systems must not only deliver higher performance but also acquire new “skills,” such as penetrating rain and fog and filtering out noise, to extend L3 applications to a broader range of weather conditions.
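"Filtering out noise" can take many forms. One common, generic point-cloud technique is radius outlier removal, which PCL offers as `RadiusOutlierRemoval`. It drops points that have too few neighbors nearby, since fog and rain returns are often sparse and isolated while real surfaces produce dense clusters. The brute-force sketch below is only an illustration of that generic idea, not Hesai’s filtering method. The radius, neighbor count and sample points are hypothetical. Variants that scale the radius with range are often used because lidar point density falls with distance.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in meters


def radius_outlier_removal(points: List[Point], radius_m: float, min_neighbors: int) -> List[Point]:
    """Keep only points with at least min_neighbors other points within radius_m.

    Brute-force O(n^2) version for illustration; real pipelines use a spatial index.
    """
    kept: List[Point] = []
    for i, p in enumerate(points):
        neighbors = 0
        for j, q in enumerate(points):
            if i != j and math.dist(p, q) <= radius_m:
                neighbors += 1
                if neighbors >= min_neighbors:
                    break  # enough support; stop counting early
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


if __name__ == "__main__":
    car_surface = [(10.0, 0.0, 0.5), (10.1, 0.0, 0.5), (10.0, 0.1, 0.5), (10.0, 0.0, 0.6)]
    fog_speck = [(3.0, 5.0, 1.0)]  # isolated return, e.g. backscatter from a droplet
    filtered = radius_outlier_removal(car_surface + fog_speck, radius_m=0.3, min_neighbors=2)
    print(f"{len(filtered)} of {len(car_surface) + len(fog_speck)} points kept")
```

The trade-off is that a radius large enough to keep sparse distant objects can also keep clustered fog returns. Practical filters therefore usually combine neighbor counts with range, intensity or multi-echo cues.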

References:

1. https://www.youtube.com/watch?v=h4f-crzpZ9w

2. https://baijiahao.baidu.com/s?id=1770109707521802538&wfr=spider&for=pc

3. Calculated based on the optical power density of the object illuminated by sunlight on a sunny day and the peak optical power density of the object illuminated by a typical long-range lidar at 100 meters.

4. Data from Gaogong Research Lab.